From Cost Center to Value Creator

This is part 1 of a 3-part series on AI governance.

- Part 1: Introduces AI governance and reframes the conversation from cost center to value creator

- Part 2: Discusses implementing AI governance for competitive advantage

- Part 3: Outlines the strategic imperatives for successful AI governance

Introduction

As companies begin to deploy both predictive and generative AI technologies, they need to understand the probabilistic nature of these algorithms and their inherent propensity to confabulate information. In my article, The Illusion of Control: Generative AI and the CIO’s Dilemma, I shared a couple of recent headlines about chatbots going awry at car dealerships.[1] Well, surprisingly (not), Air Canada’s chatbot made up a refund policy, and a Canadian tribunal forced the airline to honor it. I have to give Air Canada credit, though; it made a novel argument based on the premise that it shouldn’t be liable for any of the chatbot’s musings.[2] Thankfully, the tribunal saw through the BS and forced the airline to pay up. What befuddles me is why the airline didn’t simply pay the few hundred Canadian dollars for the bereavement flight. Was it really worth the negative publicity?

Given the twelve hidden risks of ChatGPT and generative AI, what precautions can we take to mitigate them?[3] In this day and age, consumers and stakeholders demand transparency, ethical considerations, and accountability in how AI is designed, deployed, and used. If a company loses consumer trust, it may suffer long-term, irreversible damage. Although not related to AI, look at the troubles Boeing is currently facing.[4] This reality poses a challenging question for business leaders: “In a world where trust in technology is as crucial as the technology itself, could AI governance be your next competitive advantage?”

Traditionally, AI governance is viewed through the lens of risk management and regulatory compliance: necessary safeguards to prevent misuse and ensure that AI acts in accordance with legal requirements. However, as companies become more reliant on AI technology, the strategic significance of AI governance grows. It is no longer just a set of guidelines to avoid fines; it can become a lever for competitive differentiation. By prioritizing responsible AI practices, organizations can align with current regulations and ethical standards and position themselves as trustworthy digital leaders.

This article aims to shift the perspective on AI governance from a basic compliance checklist to a strategic competitive advantage. I’ll examine how adaptive AI governance can mitigate risks associated with AI while simultaneously unlocking opportunities for growth, innovation, and building customer trust. By taking a holistic approach to AI governance, savvy businesses can set new industry standards, promote a culture of innovation that respects ethical considerations, and create a competitive advantage through trustworthy AI.

From Cost Center to Value Creator

Reframing AI Governance

What is AI governance?

AI governance encompasses the frameworks, policies, and processes organizations establish to ensure their AI systems are safe, fair, ethical, transparent, accountable, explainable (to the extent possible), and uphold human rights. According to Gartner, AI governance involves assigning and assuring organizational accountability, decision rights, risks, policies, and investment decisions for implementing AI.

Many organizations view governance simply as a means to manage risk and ensure compliance. However, some companies have managed to leverage AI governance to set themselves apart. By incorporating AI ethics (often called trustworthy AI) into development and deployment processes, businesses can help minimize risk, improve their brand reputation, build customer loyalty, and spur innovation. This approach shifts AI governance from a cost center to a value creator.

Today, over 60% of consumers look for brands they can trust before they look at price. And their definition of trust has shifted; they expect brands to take an active stand on the issues that matter to them, while the products solve everyday problems. The tried-and-true emotional and aspirational drivers like image and status are taking a backseat to health, family, quality, and social responsibility.[5]

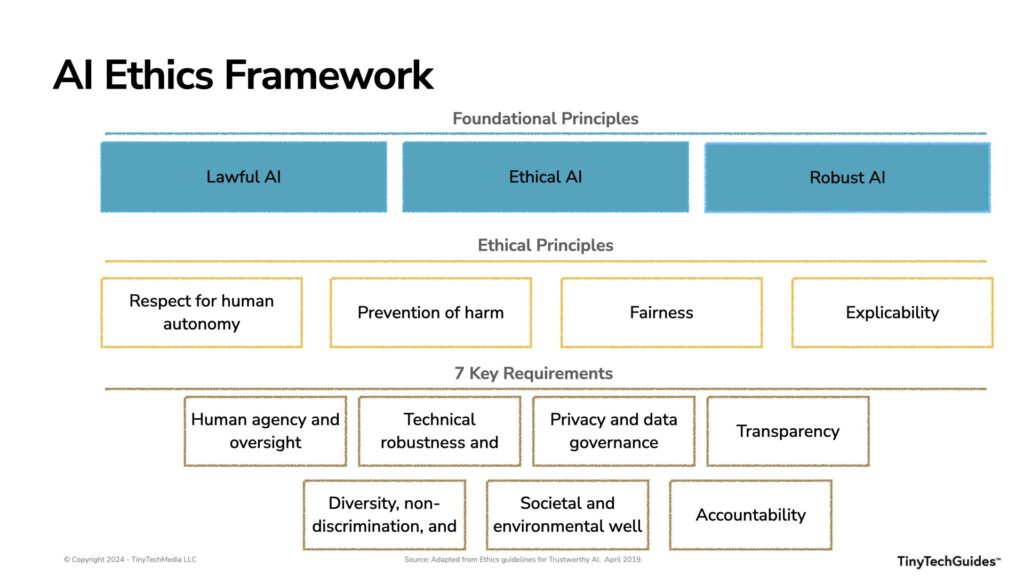

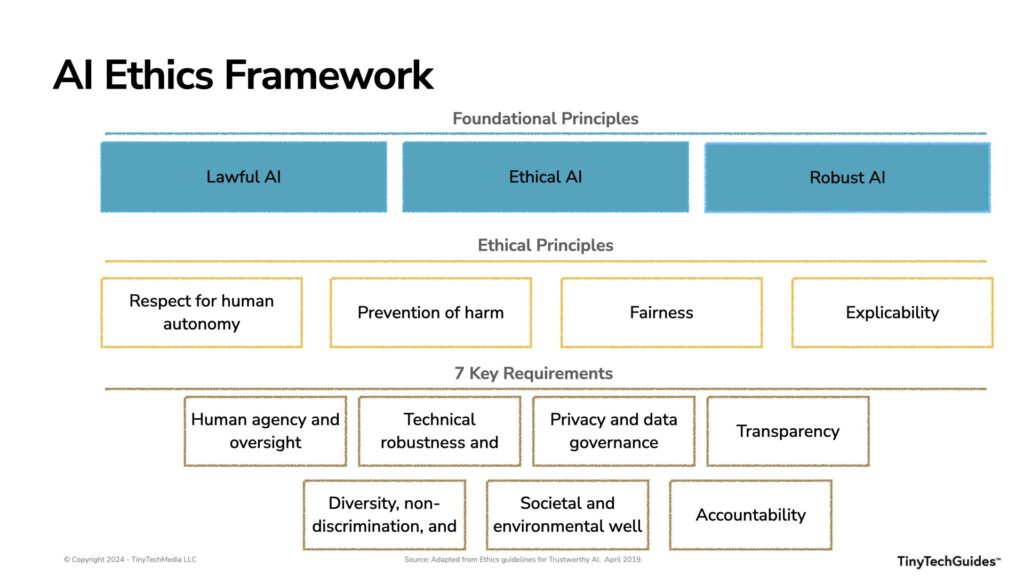

In my article Generative AI Ethics, I shared the following framework to help organizations think through the different principles and requirements.

Figure 1.1: AI Ethics Framework

The Yin and Yang of Trustworthy AI and AI Governance

Many have asked me about the difference between trustworthy AI and AI governance. They’re essentially two sides of the same coin. Trustworthy AI ensures that AI systems are designed, developed, and deployed in a lawful, ethical, and robust manner. By adhering to the Foundational and Ethical Principles in Figure 1.1, organizations can use the seven key AI ethics requirements to develop trustworthy AI. Fundamentally, trustworthy AI can help gain the confidence of users and stakeholders by being transparent about AI’s use in decision-making processes, ensuring fairness, respecting privacy, and being accountable for outcomes.

The relationship between trustworthy AI and AI governance is symbiotic. Effective AI governance frameworks are the foundation upon which trustworthy AI is built. They provide the guidelines and standards for AI development and use, ensuring that AI systems are technically robust and socially responsible. This trustworthiness is essential for businesses seeking to leverage AI, as it directly impacts customer engagement, brand loyalty, and the social license to operate in increasingly AI-driven markets.

Here are a few definitions to clarify the differences:

- AI Ethics: As illustrated in Figure 1.1, AI ethics is concerned with the moral principles guiding the development and use of AI. It involves making decisions about right or wrong in the context of AI systems and their impact on individuals, society, and businesses. Ethical AI encompasses areas such as transparency, fairness, accountability, privacy, and responsible use of AI.

- Trustworthy AI: Focuses on the technical implementation of AI to ensure reliability, safety, and transparency. It involves building AI systems that are fair, robust, and secure. Trustworthy AI aims to create systems that can be trusted to behave as expected and relied upon to reach their goals.

- AI Governance: Refers to the legal framework for ensuring responsible research, development, and use of AI technologies. It addresses risk management, stakeholder involvement, decision-making processes, regulatory compliance, and model ownership. AI governance aims to bridge the gap between accountability and ethics in technological advancement.

- AI Guardrails: Focuses on providing guidelines and limits to ensure that AI systems are developed and utilized in a manner that aligns with ethical principles while enabling kill switches and human intervention when unexpected functionality or outcomes occur. Guardrails serve as safety mechanisms to manage potential risks associated with improper use of AI. For more on AI guardrails, see my article The Illusion of Control: Generative AI and the CIO’s Dilemma.[6]

AI Ethics focuses on moral principles guiding AI development and use, and Trustworthy AI centers on technical reliability. AI Governance focuses on the legal framework ensuring responsible AI practices, while AI Guardrails provide guidelines for ethical use of AI systems. These concepts work together to ensure that AI is developed and used in a manner that is technically robust, ethically sound, legally compliant, and aligned with societal values.
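
To make the guardrail idea concrete, here is a minimal Python sketch of a kill switch plus a human-review hook wrapped around a chatbot. Everything in it is an assumption for illustration: the `Guardrail` class, the `blocked_terms` list, and the stub `fake_model` function are hypothetical, not a real product or library API.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Guardrail:
    """Wraps a text-generating function with a kill switch and a review hook."""

    generate: Callable[[str], str]  # any function that maps a prompt to a reply
    blocked_terms: Tuple[str, ...] = ("refund", "policy")  # hypothetical topics
    enabled: bool = True  # kill switch: set to False to stop AI replies

    def respond(self, prompt: str) -> str:
        # Kill switch: route everything to a human when the assistant is off.
        if not self.enabled:
            return "ESCALATE: assistant disabled, routing to a human agent"
        draft = self.generate(prompt)
        # Human intervention: hold drafts that touch sensitive topics.
        if any(term in draft.lower() for term in self.blocked_terms):
            return "ESCALATE: draft needs human review before sending"
        return draft


def fake_model(prompt: str) -> str:
    """Stand-in for a real chatbot; returns a risky, made-up answer."""
    return "Our refund policy covers bereavement fares after travel."


bot = Guardrail(generate=fake_model)
print(bot.respond("Can I get a refund?"))
```

In this sketch, a draft that mentions refunds or policies is never sent directly; it is held for a person to check. A real deployment would likely need far richer checks than keyword matching, but the shape is the same: a limit, an off switch, and a path to a human.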

Examples of AI Governance in Action

Data, analytics, and AI governance aren’t new, but creating a competitive advantage from them certainly is. Unless they’re in a highly regulated industry, most companies pay lip service to governance without taking any meaningful action. “We’re pro AI ethics” is certainly a nice platitude, but an AI governance program requires dedicated resources and a prolonged commitment. However, a few forward-thinking companies have recognized AI governance’s strategic importance and positioned themselves as leaders in this space. For example:

- Big tech companies like Google, Microsoft, and AWS have established comprehensive AI ethics guidelines and have dedicated teams focused on responsible AI development.[7],[8],[9] These companies have helped shape industry standards. They’ve influenced the broader ecosystem by creating products, tools, frameworks, and collaborative research, driving innovation while promoting ethical practices (as long as they align with their corporate interests).

- Financial institutions such as HSBC and JPMorgan Chase have implemented AI governance frameworks that prioritize data privacy, algorithmic transparency, and ethical use of AI in financial decision-making.[10],[11] Their commitment to responsible AI has enhanced customer trust and contributed to more sustainable business models in the competitive financial services sector.

- Healthcare organizations are increasingly adopting AI governance models that address ethical concerns unique to healthcare, including patient consent, data confidentiality, algorithmic bias, and decision-making. Mayo Clinic and other institutions have developed AI governance protocols that not only comply with regulatory standards but also advance patient care, demonstrating the vital role of governance in unlocking the potential of AI in highly regulated environments.[12]

These examples illustrate the benefits of robust AI governance, including improved customer trust, enhanced regulatory compliance, and responsible innovation.

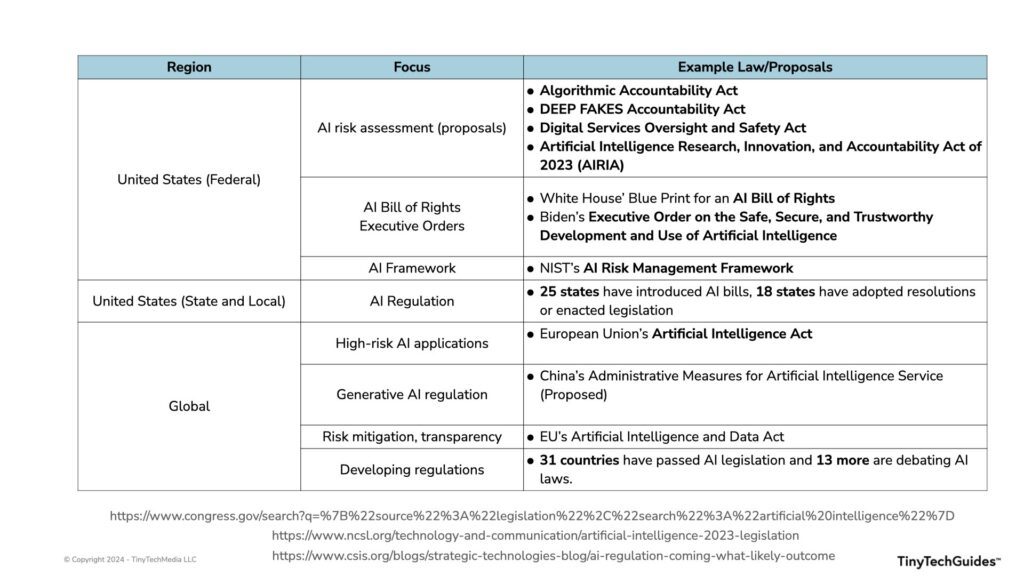

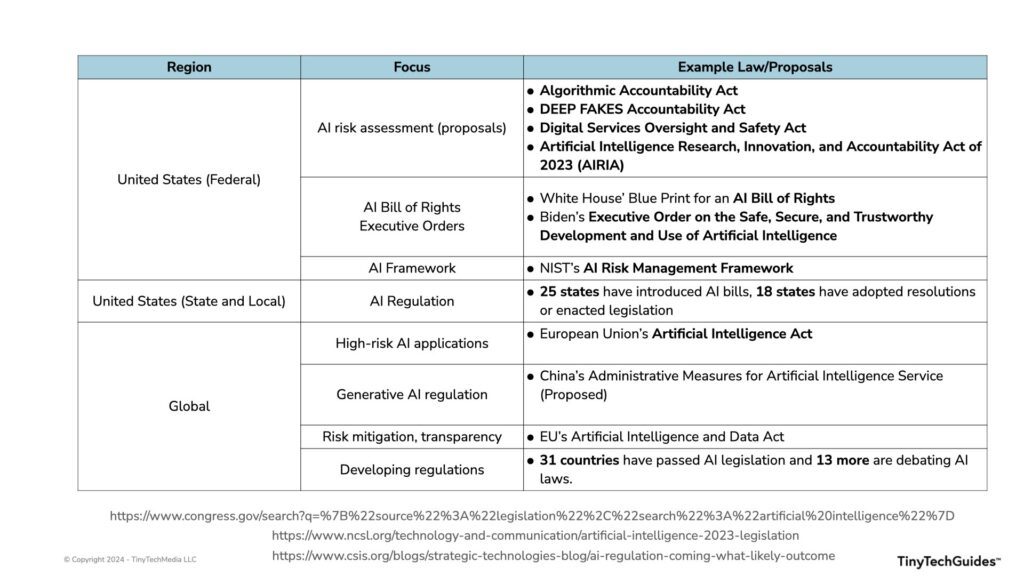

Preparing for Upcoming Regulations

As readers of my articles Regulating Generative AI, Decoding the AI Bill of Rights, and Generative AI’s Powers and Perils: How Biden’s Executive Order is Reshaping the Tech Landscape will know, there is a slew of upcoming AI regulations that companies need to prepare for.[13],[14],[15] A sample of these includes:

Figure 1.2: Upcoming Regulations[16],[17],[18]

Similar to when GDPR became a reality in the E.U., organizations should now start preparing for these regulations. Those who delay risk fines or potential damage from their lack of compliance.

One Size Does Not Fit Most

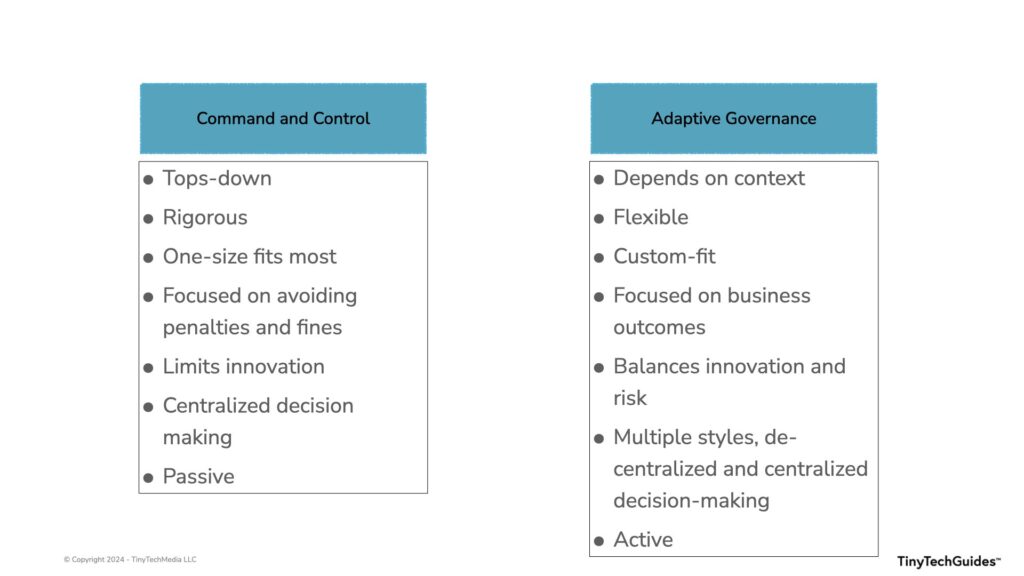

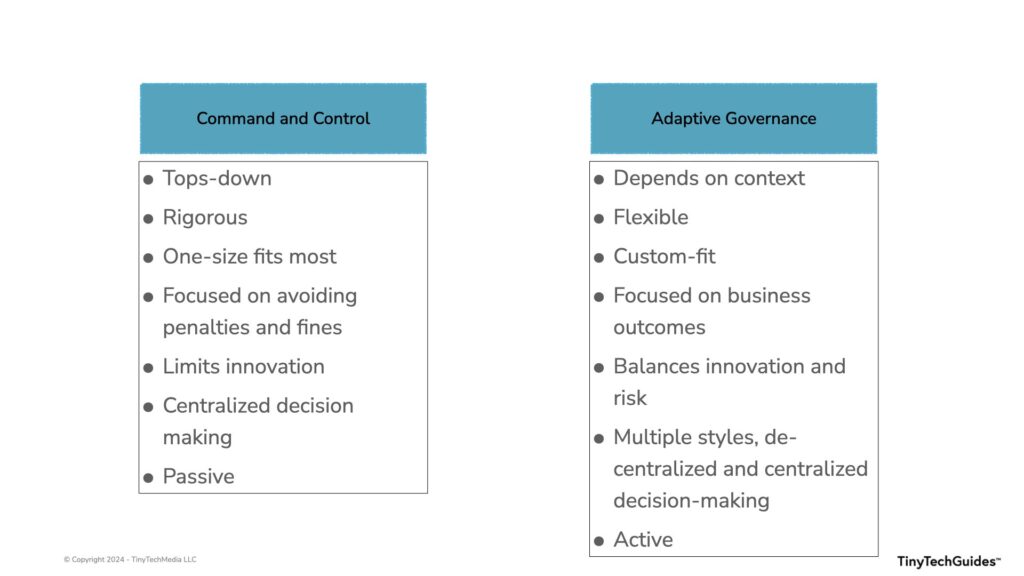

Organizations in highly regulated environments like healthcare, financial services, and insurance have long-standing governance programs. These programs are in place to ensure that organizations comply with the relevant regulations and policies, thus avoiding stiff penalties and fines. They often take a rigorous, top-down approach to governance to accomplish their objective.

As organizations become more AI-driven, the classic approach to governance simply doesn’t work. Companies will certainly need to adhere to regulations, but with AI, the scope and breadth of the use cases that can be implemented are quite varied. Depending on the use case, companies should take a more flexible and adaptive approach to governance. For example, if your organization uses AI to grant or deny credit or to accept or deny an insurance claim, that use case should have high governance rigor. If your organization uses AI to determine what marketing offer should be displayed on a website, would that require the same level of rigor? Probably not.

In traditional governance programs, strict rules and processes are in place for data management, access control, and decision-making. However, these rigid structures do not always fit well with AI technologies’ various applications and uses. Due to the wide range of AI use cases, there should be different levels of governance. In other words, the governance should be adaptive to the use case.

“Adaptive governance is a concept from institutional theory that focuses on the evolution of formal and informal institutions for the management and use of shared assets, such as common pool natural resources and environmental assets that provide ecosystem service.”[19]

For our purposes,

Adaptive governance is a decentralized governance construct that applies different governance styles for various use cases and business outcomes.

Figure 1.3: Comparing Command and Control and Adaptive Governance

Current governance models tend to be uniform and top-down, emphasizing control and compliance while stifling innovation. Decision rights are often unclear and detached from the people making local decisions. The future of governance should embrace diverse, context-specific approaches that encourage organizational innovation. A flexible strategy is vital, spanning the enterprise and ecosystem. Decision rights must be decentralized, covering formal and informal structures, and linked to business outcomes and value creation. Proactive governance should anticipate opportunities and risks, rather than solely focusing on compliance.

Practical Advice and Next Steps

- Establish a Multidisciplinary AI Governance Committee: Form a team that includes members from IT, legal, compliance, and business units. This committee should meet regularly to discuss the evolving landscape of AI governance and ensure that the organization’s AI initiatives align with current ethical standards and anticipated regulatory changes. They will be responsible for translating AI governance frameworks into practice and overseeing the implementation of these policies.

- Conduct a Risk-Benefit Analysis for AI Applications: Assess each AI application’s risks and benefits to determine the appropriate level of governance. For high-stakes AI applications, such as those affecting credit or insurance decisions, apply stringent governance controls. For lower-stakes scenarios, like marketing content generation, adopt a lighter governance touch that maintains ethical standards.

- Implement Continuous AI Ethics Training: Develop a training program for all employees involved in AI development and deployment to ensure they are aware of ethical considerations, company policies, and legal requirements. This training should be updated regularly to reflect the latest developments in AI ethics and governance, fostering a culture of ethical AI use within the organization.
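
The risk-benefit step above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a definitive scoring method: the `UseCase` fields, the three `Rigor` tiers, and the rules in `governance_rigor` are all hypothetical and would need to reflect your own regulatory context.

```python
from dataclasses import dataclass
from enum import Enum


class Rigor(Enum):
    """Hypothetical governance tiers, from lightest to strictest."""

    LIGHT = "light"
    STANDARD = "standard"
    STRINGENT = "stringent"


@dataclass(frozen=True)
class UseCase:
    name: str
    affects_individual_rights: bool  # e.g., credit or insurance decisions
    uses_personal_data: bool


def governance_rigor(use_case: UseCase) -> Rigor:
    """Map a use case to a governance tier (illustrative rules only)."""
    if use_case.affects_individual_rights:
        return Rigor.STRINGENT
    if use_case.uses_personal_data:
        return Rigor.STANDARD
    return Rigor.LIGHT


credit = UseCase("credit decisioning", True, True)
offers = UseCase("website marketing offer", False, False)

for case in (credit, offers):
    print(f"{case.name}: {governance_rigor(case).value}")
```

The point of the sketch is the shape of the decision, not the specific rules: the governance committee owns the criteria, and each new AI initiative is classified before it is built rather than after it ships.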

Summary

AI governance is no longer just about compliance; it’s a strategic imperative that can set companies apart in the digital marketplace. Organizations can mitigate risks, foster innovation, and build customer trust by embracing a holistic and adaptive approach to AI governance. Doing so lets your organization transcend the confines of traditional, compliance-based governance and create a competitive edge.

As regulations continue to proliferate, companies must take a proactive stance to prepare for them. This means staying informed of upcoming changes, engaging in policy discussions, and anticipating the impact of new laws on current and future AI initiatives. By preparing for these changes now, businesses can ensure they are not caught off-guard and can continue to use AI governance as a lever for differentiation. It is this forward-looking readiness that will enable enterprises to retain consumer trust.

Adaptive governance becomes essential as AI technology becomes increasingly embedded in business processes. Tailoring governance to fit specific use cases enables organizations to be agile and responsive to the unique demands of different AI applications, maximizing value while upholding ethical standards. After all, you don’t want your organization associated with the confabulating chatbots, do you? Time to book my next flight.

[1] Sweenor, David. 2024. “The Illusion of Control: Generative AI and the CIO’s Dilemma.” Medium. January 28, 2024. https://medium.com/@davidsweenor/the-illusion-of-control-generative-ai-and-the-cios-dilemma-3a588cd7e58c.

[2] Belanger, Ashley. 2024. “Air Canada Has to Honor a Refund Policy Its Chatbot Made Up.” Wired. February 17, 2024. https://www.wired.com/story/air-canada-chatbot-refund-policy/.

[3] Sweenor, David. 2023. “The 12 Hidden Risks of ChatGPT and Generative AI.” Medium. December 16, 2023. https://medium.com/@davidsweenor/the-12-hidden-risks-of-chatgpt-and-generative-ai-e7bb1ec45f00.

[4] Lenthang, Marlene, Jay Blackman, and Rob Wile. 2024. “Boeing Ousts Head of 737 Max Program in Management Shake-Up.” NBC News. February 21, 2024. https://www.nbcnews.com/news/us-news/boeing-ousts-head-737-max-program-management-shake-rcna139831.

[5] Murtell, Jennifer. 2022. “Does the World Really Need More Brands?” American Marketing Association. April 11, 2022. https://www.ama.org/marketing-news/does-the-world-really-need-more-brands/.

[6] Sweenor, David. 2024. “The Illusion of Control: Generative AI and the CIO’s Dilemma.” Medium. January 28, 2024. https://medium.com/@davidsweenor/the-illusion-of-control-generative-ai-and-the-cios-dilemma-3a588cd7e58c.

[7] Google. n.d. “Google Responsible AI Practices.” Google AI. https://ai.google/responsibility/responsible-ai-practices/.

[8] Microsoft. n.d. “Responsible AI Principles from Microsoft.” Microsoft. https://www.microsoft.com/en-us/ai/responsible-ai.

[9] “Responsible AI – Building AI Responsibly at AWS – AWS.” n.d. Amazon Web Services, Inc. https://aws.amazon.com/machine-learning/responsible-ai/.

[10] “Our Conduct | HSBC Holdings Plc.” n.d. HSBC. https://www.hsbc.com/who-we-are/esg-and-responsible-business/our-conduct.

[11] “Research Agenda.” n.d. JPMorgan Chase. https://www.jpmorgan.com/technology/artificial-intelligence/research-agenda.

[12] Khanna, Samiha. 2023. “Mayo Clinic to Deploy and Test Microsoft Generative AI Tools.” Mayo Clinic News Network. September 28, 2023. https://newsnetwork.mayoclinic.org/discussion/mayo-clinic-to-deploy-and-test-microsoft-generative-ai-tools/.

[13] Sweenor, David. 2023a. “Regulating Generative AI.” Medium. August 8, 2023. https://medium.com/towards-data-science/regulating-generative-ai-e8b22525d71a.

[14] ———. 2023b. “Decoding the AI Bill of Rights.” Medium. October 21, 2023. https://medium.com/@davidsweenor/decoding-the-ai-bill-of-rights-e5213a609abe.

[15] ———. 2023c. “Generative AI’s Powers and Perils: How Biden’s Executive Order Is Reshaping the Tech Landscape.” Medium. December 2, 2023. https://medium.com/@davidsweenor/generative-ais-powers-and-perils-how-biden-s-executive-order-is-reshaping-the-tech-landscape-26b520f2604f.

[16] Congress. 2023. “Congress.gov | Library of Congress.” Congress.gov. 2023. https://www.congress.gov/.

[17] “Artificial Intelligence 2023 Legislation.” 2024. National Conference of State Legislatures. January 12, 2024. https://www.ncsl.org/technology-and-communication/artificial-intelligence-2023-legislation.

[18] Whyman, Bill. 2023. “AI Regulation Is Coming- What Is the Likely Outcome?” Center for Strategic and International Studies. October 10, 2023. https://www.csis.org/blogs/strategic-technologies-blog/ai-regulation-coming-what-likely-outcome.

[19] Hatfield-Dodds, Steve, Rohan Nelson, and David Cook. 2007. “Adaptive Governance: An Introduction, and Implications for Public Policy.” https://core.ac.uk/download/pdf/6418177.pdf.