Understanding and Mitigating Model Collapse

Introduction

Imagine if you could clone yourself to be in multiple places at once, handling all your responsibilities effortlessly. Remember the sci-fi comedy film Multiplicity (circa 1996), where Doug Kinney (played by Michael Keaton), clones himself to manage his work and personal life. However, as more Dougs are created, each subsequent clone exhibits exaggerated traits and diminished intelligence compared to the previous version. The clones, initially created to reduce chaos, end up creating more confusion and entropy in Kinney’s life.

In the world of artificial intelligence (AI), a similar phenomenon occurs when large language models (LLMs) are trained on data generated by earlier versions of themselves. Just like the clones in Multiplicity, the AI models begin to lose touch with the original data distribution, leading to increased chaos and confusion–a kind of entropy in the AI world known as “model collapse”.

The Phenomenon of Model Collapse

Just like Doug in Multiplicity who faces chaos as he creates more clones, AI models face a similar fate when they are recursively trained on data generated by earlier versions of themselves. They become dumber and more exaggerated over time.

What is Model Collapse?

Model collapse refers to a degenerative process where, over time, AI models lose information about the original content (data) distribution. As AI models are trained on data generated by their predecessors, they begin to “forget” the true underlying data distribution, leading to a narrowing of their generative capabilities. This is akin to the clones in Multiplicity becoming less intelligent and more exaggerated with each generation.

Although the technical explanation of this is beyond the scope of this blog, you may notice this in some AI image generators–when they start to produce nearly identical images, it is likely that the model has collapsed. Perhaps a more familiar example is with AI generated news sites, reviews and content farms. These sites are essentially automatically generating factually inaccurate articles and have the ability to spread misinformation at an alarming rate.[1] Now, some of this may be related to AI hallucinations but it’s also highly likely that these AI content generators are scraping articles from other AI generated articles and re-writing them automatically. Many of them are instantly recognizable–they’re typically full of ads and pop-ups with little to no meaningful content.

This is akin to the clones in Multiplicity becoming less intelligent and more exaggerated with each generation.

How Does it Happen?

Model collapse can occur due to many factors such as lack of diversity in the training data, amplification of biases and model overfitting. When an AI model is trained on AI-generated data, it is essentially learning from a reflection of itself. This reflection, much like a game of ‘telephone’, becomes more distorted with each iteration.

When we train AI on AI, it becomes dumber and dumber.

For example, take this photo of a surfer.

Here is one of the four descriptions Midjourney created from the photo:

“statue of lei wearing surfer in honolulu, hawaii, in the style of light bronze and pink, frank frazetta, traditional arts of africa, oceania, and the americas, symmetrical arrangements, twisted branches, street art aesthetic, narrative-driven visual storytelling –ar 4:3”

Midjourney

Here are four AI generated versions of my photo:

Yes, these are quite pink but the first one looks closest to the original and I had no idea who Frank Frazetta was but I then asked it to describe that image and simply took the first one.

“a statue for a surfer on top of a pink surfboard among some flowers, in the style of ray tracing, monochromatic compositions, reefwave, low-angle shots, flamboyant, vibrant street scenes, rtx on –ar 77:58”

Midjourney

Using the above as a description, the four images below were generated.

Now these are quite interesting but do not seem to represent the original in any way shape or form. That was only two generations removed from the original…what happens if we did this, 100, 1000, or 10,000 times? Now, this is not a perfect example of degenerative learning but rather, an example of AI entropy. The system tends towards a state of more and more disorder.

Insights from the Smart People

A research paper titled “The Curse of Recursion:Training Data on Generated Data Makes Models Forget” the technical aspects of model collapse are discussed. The authors demonstrate that it can happen across all models, not just generative AI models.

Models Become Dumber (Degenerative Learning)

One of the critical insights from the research is the concept of “degenerative learning”. In the context of AI models, degenerative learning refers to the process where, over time, the models lose their ability to accurately represent the diversity and complexity of the original data distribution.

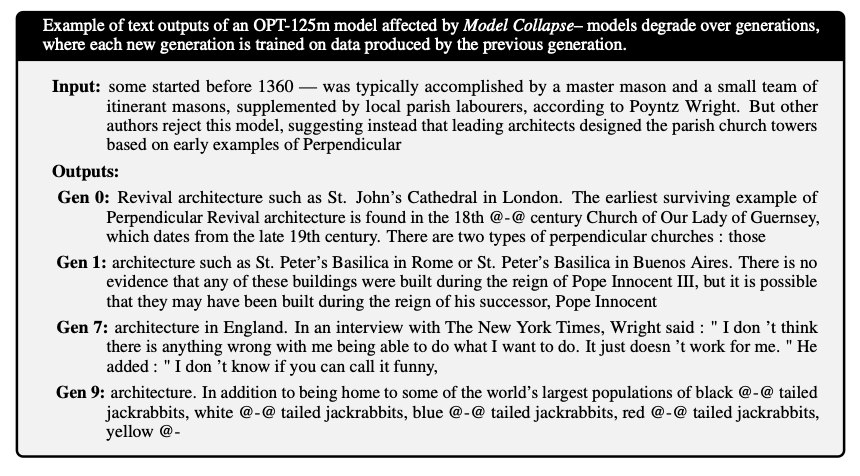

The authors cited the following example:

As you can see, given some input text, if you train each model on data produced from previous generations, it becomes nonsensical.

This happens for several reasons including:

- Loss of Rare Events: As models are trained on data generated by previous versions of themselves, they tend to focus on the most common patterns and start forgetting rare or improbable events. This is akin to the models losing their “long-term memory” –similar to Doug in Multiplicity. Oftentimes, rare events are important singles in the data–whether they represent anomalies in manufacturing processes or fraudulent transactions. Rare events are important to understand and maintain. For example, a common practice in text analytics projects is to remove “junk” words–these might be pronouns, definite and indefinite articles, and so forth. However, for fraud use cases–it is the pronouns that are the signal for fraud. Fraudsters tend to speak in the third person rather than the first.

- Amplification of Biases: Each iteration of training on AI-generated data can amplify existing biases. Since the model’s output is based on the data it was trained on, any bias in the training data can be reinforced and exaggerated over time–also similar to the multiple Dougs. We’ve already seen the amplification of biases in the traditional AI world which has led to discriminatory hiring, racial bias with healthcare or discriminatory tweets. We need to have controls in place to detect and mitigate their perpetuation.

- Narrowing of Generative Capabilities: The generative capabilities of the model begin to narrow as it becomes more influenced by its own projections of reality. The model starts producing content that is increasingly homogeneous and less representative of the diversity and rare events found in the original data. As everything begins to regress to the mean and a state of homogeneity, this will lead to a loss of originality (we already see it on recipe websites). For LLMs, it’s the variation that give each writer or artist their particular tone and style.

- Functional Approximation Error: The paper mentions that functional approximation error can occur if the function approximators are insufficiently expressive. This error can be minimized by using more expressive models, but too much expressiveness can compound noise and lead to overfitting.

Degenerative learning is characterized as a vicious cycle where the model’s ability to learn and represent data accurately deteriorates with each iteration of training on AI-generated content. This has significant implications for the quality and reliability of the content generated by AI models.

Implications of Model Collapse

Understanding the phenomenon of model collapse is interesting, but it is equally important to recognize its implications. Model collapse can have far-reaching consequences, affecting the quality, reliability, and fairness of AI-generated content. If not properly accounted for, your organization could be at risk.

Quality and Reliability

As AI models undergo degenerative learning, the quality and reliability of the content they generate can significantly deteriorate. This is because the models lose touch with the original data distribution and become more influenced by their own projections of reality. For instance, an AI model used for generating news articles might start producing content that is not factually accurate, overly homogeneous or simply fake news!

Fairness and Representation

Model collapse can have serious implications for fairness and representation. As models forget rare events and their generative capabilities narrow, content related to marginalized communities or less common topics may be underrepresented or misrepresented. This can perpetuate biases and stereotypes, and contribute to the exclusion of certain voices and perspectives.

Ethical Concerns

The ethical concerns surrounding model collapse are significant. When AI-generated content is used in decision-making, education, or information dissemination, the integrity of the content is paramount. Model collapse can lead to the dissemination of biased, inaccurate, or homogenized content, which can have ethical implications, especially if it affects people’s lives, opinions, or access to opportunities.

Economic and Social Impact

On an economic and social level, model collapse can affect the trust and adoption of AI technologies. If businesses and consumers cannot rely on the content generated by AI models, they may be less likely to adopt these technologies. This can have economic implications for industries that heavily rely on AI, and social implications in terms of public perception and trust in AI.

Strategies for Mitigating Model Collapse

Model collapse, with its far-reaching implications, necessitates the development of strategies to mitigate its effects. Here are some strategies that can be employed to prevent or mitigate model collapse in AI systems:

Retaining Original Human-Produced Datasets

One of the key insights from the research paper is the importance of retaining a copy of the original human-produced dataset. Periodically retraining the model on this data can help ensure that the model remains grounded in reality and continues to represent the diversity and complexity of human experiences. A recent research paper from Microsoft Research suggested that training LLMs on trusted data like textbooks may help improve the accuracy of LLMs.

Introducing New Human-Generated Datasets

In addition to retaining original datasets, introducing new, clean, human-generated datasets into the training process is beneficial. This can help in preventing the model from narrowing its generative capabilities and ensure that it continues to learn and adapt to new information. As companies begin fine-tuning LLMs on their proprietary corporate data, this may help keep LLMs from degrading.

Monitoring and Regular Evaluation

Regularly monitoring and evaluating the performance of AI models is crucial. By setting up evaluation metrics and benchmarks, it is possible to detect early signs of model collapse. This allows for timely interventions, such as adjusting the training data or tuning the model parameters. This is no different from our traditional guidance on model monitoring, companies need to implement a MLOps framework to continually monitor the models and data for drift. Not only do they need to detect this, they’ll need additional mechanisms to ensure that models are not hallucinating and are producing results that are in alignment with the company’s goals which will be a new capability for many organizations.

Diversifying Training Data

Ensuring that the training data is diverse and representative of different perspectives and experiences can help in preventing biases and ensuring fairness in AI-generated content. This includes ensuring representation of underrepresented communities and rare events. This goes without saying, organizations need to understand the source data that was used to train the model to ensure that it aligns with reality and represents the best of what society could be. Blindly using internet data which is full of negativity, bias and misinformation is a recipe for disaster.

Community Coordination and Collaboration

Model collapse is not just a technical challenge but also an ethical and societal one. Community-wide coordination involving AI companies, content producers, researchers, and policymakers is essential. Sharing information, best practices, and collaborating on developing standards and guidelines can be instrumental in addressing model collapse. Although guidelines and frameworks are good, similar to the United Nations AI Ethics Framework, enforcement and buy-in across geopolitical boundaries will be challenging.

Summary

In Multiplicity, Doug’s attempt to clone himself to manage his responsibilities leads to unintended chaos and entropy. This scenario finds a parallel in the world of AI, where training models on AI-generated data can lead to a form of entropy known as model collapse.

Just as the clones in the movie become dumber and more chaotic with each generation, AI models can lose their ability to accurately represent the diversity and complexity of the original data as they train on their own outputs.

Model collapse, akin to the entropy in Multiplicity, has far-reaching implications for the quality, reliability, and fairness of AI-generated content. It is a reminder that unchecked replication, whether it be clones in a movie or AI training on its own data, can lead to a loss of information and an increase in disorder.

However, unlike the uncontrolled cloning in Multiplicity, we have the tools and knowledge to manage and mitigate model collapse in AI systems. By retaining original human-produced datasets, diversifying training data, regularly monitoring AI models, and fostering community coordination, we can counteract the entropy and ensure that AI remains a reliable and beneficial tool.

As AI continues to evolve, it is imperative to remember the lessons from Multiplicity, entropy and the research on model collapse. Through collective efforts, we can practice AI responsibly, ensuring that it remains grounded in reality and serves the diverse needs of all communities, without descending into chaos.

In essence, by actively managing the ‘cloning process’ of AI data and being mindful of the entropy it can create, we can steer AI development in a direction that is both innovative and responsible.

[1] Thompson, Stuart A. 2023. “A.I.-Generated Content Discovered on News Sites, Content Farms and Product Reviews.” The New York Times, May 19, 2023, sec. Technology. https://www.nytimes.com/2023/05/19/technology/ai-generated-content-discovered-on-news-sites-content-farms-and-product-reviews.html.

Categories

- AI Trends (8)

- Analytics Leadership (10)

- Artificial Intelligence (52)

- Data Faces Podcast (5)

- Data Leadership (3)

- Marketing (18)

- Modern Data Stack (3)

- News (8)

- Tech News (1)

Latest Posts

- Stop Chasing Hallucinations—Focus on Agentic Quality

- Gartner’s Four-Box Foreteller of Fortunes (and FUD)

- From “AI-Ready” to AI Reality: Why Actionable Data Strategies Beat Endless Planning

- Team Dynamics Over Technology: The Human Elements that Drive AI Success

- The Customer Hero Principle: Why Your B2B Messaging Falls Flat